Proxmox ZFS Performance Tuning

Proxmox is a great open source alternative to VMware ESXi. ZFS is a wonderful alternative to expensive hardware RAID solutions, and is flexible and reliable. However, if you spin up a new Proxmox hypervisor, you may find that your VMs lock up under heavy IO load to your ZFS storage subsystem. Here are a dozen tips to help resolve this problem.

1. Ensure that the `ashift` parameter is set correctly for every vdev in your pools. The value is the base-2 logarithm of the sector size ZFS will assume, so generally speaking, ashift should be set as follows:

- ashift=9 for older HDDs with 512-byte sectors

- ashift=12 for newer HDDs with 4k sectors

- ashift=13 for SSDs with 8k pages

If you're unsure about the sector size of your drives, you can consult the list of drive IDs and sector sizes hard-coded into ZFS. If you're still unsure, it's better to set a higher ashift than a lower one: too low an ashift forces slow read-modify-write cycles on the drive, while too high an ashift mostly costs some space efficiency. Note that ashift can only be set when creating a vdev and cannot be modified afterwards. You can see the ashift of an existing pool's vdevs by running `sudo zdb` and looking at the `ashift:` lines.
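Because ashift is simply the base-2 logarithm of the sector size, the list above can be computed rather than memorized. The following is a minimal POSIX shell sketch. `ashift_for` is a hypothetical helper name, and the `lsblk` command in the comment is only one way to see what sector size a drive reports.

```shell
#!/bin/sh
# Sketch: print the ashift for a sector size given in bytes.
# ashift is log2(sector size): 512 -> 9, 4096 -> 12, 8192 -> 13.
# ashift_for is a hypothetical helper, not part of ZFS.
ashift_for() {
  size=$1
  bits=0
  # Halve the size until it reaches 1, counting the halvings.
  while [ "$size" -gt 1 ]; do
    if [ $((size % 2)) -ne 0 ]; then
      echo "not a power of two: $1" >&2
      return 1
    fi
    size=$((size / 2))
    bits=$((bits + 1))
  done
  echo "$bits"
}

# To see the physical sector size each drive reports (some drives
# report 512 bytes even though they use 4k sectors internally):
#   lsblk -d -o NAME,PHY-SEC
```

For example, `sudo zpool create -o ashift=$(ashift_for 4096) tank mirror /dev/sda /dev/sdb` would create a mirrored pool with ashift=12. The pool and device names are placeholders.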

2. Avoid raidz pools for running VMs; use mirror vdevs instead. The write amplification associated with raidz1+ vdevs will kill performance.
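A pool built from mirrors consists of several two-way mirror vdevs striped together. As an illustration, this hypothetical `mirror_vdevs` helper turns a list of disks into the vdev arguments that `zpool create` expects. It is a sketch and is not part of ZFS.

```shell
#!/bin/sh
# Sketch: turn a list of disks into striped two-way mirror vdevs,
# e.g. "sda sdb sdc sdd" -> "mirror sda sdb mirror sdc sdd".
# mirror_vdevs is a hypothetical helper, not part of ZFS.
mirror_vdevs() {
  if [ $(($# % 2)) -ne 0 ]; then
    echo "need an even number of disks" >&2
    return 1
  fi
  out=""
  # Consume the disks two at a time, one mirror per pair.
  while [ $# -gt 0 ]; do
    out="$out mirror $1 $2"
    shift 2
  done
  # Strip the leading space added by the first iteration.
  echo "${out# }"
}
```

For example, `sudo zpool create -o ashift=12 tank $(mirror_vdevs sda sdb sdc sdd)` expands to `... tank mirror sda sdb mirror sdc sdd`. Real setups usually refer to disks by their stable `/dev/disk/by-id/` names rather than `sdX`.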

3. Create your ZFS vdevs from whole disks, not partitions.

`sudo zdb` will show `whole_disk: 1` if you properly assigned a whole disk (rather than a partition) to a vdev.
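On Linux, ZFS partitions a whole disk itself, so a vdev's `path` may still end in a partition such as `sda1` or `-part1`. The `whole_disk` field is the one to check. The minimal sketch below scans `zdb` output for vdevs built on partitions. It assumes that each vdev's `path:` line comes before its `whole_disk:` line, which is worth confirming against your own `zdb` output. `partition_vdevs` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: read `zdb` output on stdin and print the path of every
# vdev that reports whole_disk: 0 (i.e. was given a partition).
# Assumes each vdev's "path:" line precedes its "whole_disk:" line.
# partition_vdevs is a hypothetical helper, not part of ZFS.
partition_vdevs() {
  awk '
    # Remember the most recent path, minus its single quotes (\047).
    $1 == "path:" { path = $2; gsub("\047", "", path) }
    $1 == "whole_disk:" && $2 == "0" { print path }
  '
}
```

Running `sudo zdb | partition_vdevs` should print nothing if every vdev was created from a whole disk.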

4. Enable lz4 compression on your pools.

Run `sudo zfs set compression=lz4 $POOL`. lz4 is fast, and you're likely IO bound rather than CPU bound, so enabling compression will likely be a net win for performance. Compression only applies to data written after the property is set. Note that you can disable compression on individual datasets that store incompressible data.
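`zfs get compressratio` reports the effect of compression as a ratio such as `1.50x`. Converting that ratio into space saved is simple arithmetic: saved = (1 - 1/ratio) * 100. A 1.50x ratio therefore means roughly a third of the space is saved. The hypothetical helper below does the conversion.

```shell
#!/bin/sh
# Sketch: convert a ZFS compressratio value such as "1.50x" into the
# percentage of space saved: (1 - 1/ratio) * 100, rounded.
# compress_savings is a hypothetical helper, not part of ZFS.
compress_savings() {
  printf '%s\n' "$1" | awk '{
    r = $1
    sub(/x$/, "", r)   # drop the trailing "x"
    printf "%.0f\n", (1 - 1 / r) * 100
  }'
}
```

For example: `compress_savings "$(zfs get -H -o value compressratio $POOL)"`.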

5. Disable atime on your pools.

Run `sudo zfs set atime=off $POOL`, which stops ZFS from writing an access-time update every time a file is read.
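Child datasets inherit both settings unless they override them. To confirm that the settings took effect everywhere, a sketch like the following can filter `zfs get` output. `audit_props` is a hypothetical name. It assumes the tab-separated format that `zfs get -H` prints, and it skips `-` values, which ZFS prints for properties that do not apply to a dataset (such as atime on a zvol).

```shell
#!/bin/sh
# Sketch: read `zfs get -H -o name,property,value compression,atime`
# output on stdin and report datasets not using lz4 or atime=off.
# audit_props is a hypothetical helper, not part of ZFS.
audit_props() {
  awk -F '\t' '
    $3 == "-" { next }   # property does not apply to this dataset
    $2 == "compression" && $3 != "lz4" { print $1 ": compression=" $3 }
    $2 == "atime" && $3 != "off" { print $1 ": atime=" $3 }
  '
}
```

For example, `zfs get -r -H -o name,property,value -t filesystem,volume compression,atime $POOL | audit_props` prints nothing when everything is set as recommended.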

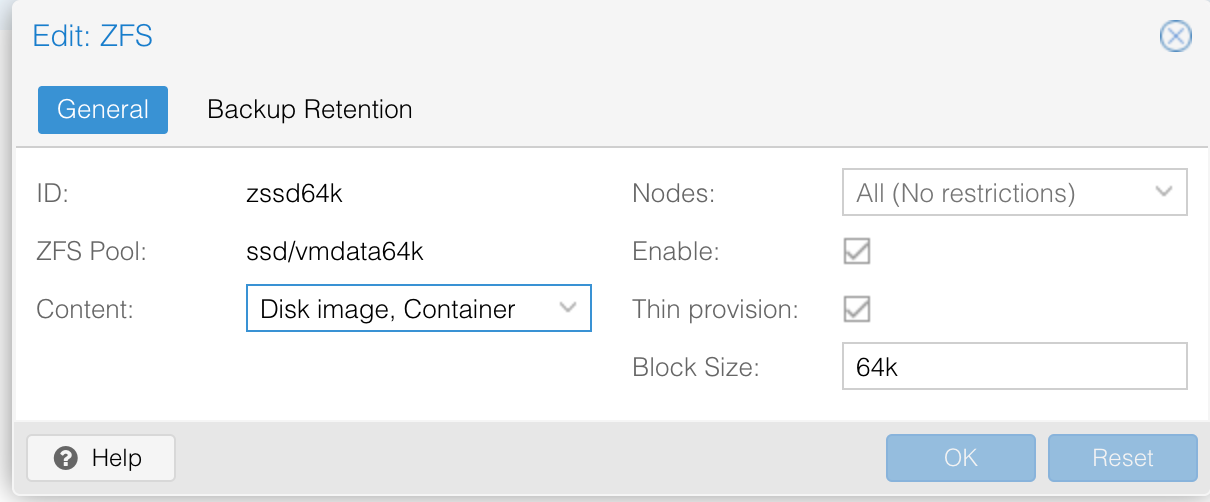

6. Consider setting a higher volblocksize for specific workloads. The default `recordsize` in ZFS (which applies to filesystem datasets) is 128K, but Proxmox by default uses an 8K `volblocksize` for zvols. Setting this higher did more than anything else to reduce hypervisor and guest lockups during heavy IO. I recommend experimenting with values between 16k and 64k to find what works best for your system. Note that Proxmox sets `volblocksize` each time it creates a zvol (usually when a VM is created), and it cannot be modified after the zvol is created. The block size is configurable in the Proxmox UI under 'Datacenter -> Storage -> $ID -> Edit -> Block Size'.
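Because volblocksize is fixed at creation time, changing the storage's Block Size only affects new disks. Existing zvols keep their old value. The minimal sketch below finds those zvols. It assumes the output of `zfs get -H -p -o name,value -t volume volblocksize`; with `-p`, sizes are exact byte counts, so 8K prints as 8192. `small_zvols` is a hypothetical helper. The UI field should correspond to the `blocksize` option of the `zfspool` entry in `/etc/pve/storage.cfg`.

```shell
#!/bin/sh
# Sketch: read `zfs get -H -p -o name,value -t volume volblocksize`
# output on stdin and print zvols whose volblocksize (in bytes) is
# below the given threshold.
# small_zvols is a hypothetical helper, not part of ZFS.
small_zvols() {
  threshold=$1
  awk -F '\t' -v min="$threshold" '$2 + 0 < min + 0 { print $1 }'
}
```

For example, `zfs get -H -p -o name,value -t volume volblocksize | small_zvols 16384` lists zvols still below 16K.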

7. If your workload does a lot of synchronous writes, you'll likely want to add an (enterprise-grade) SSD as a separate log device (SLOG) to hold the ZIL. This can be added to a pool as follows:

`sudo zpool add $POOL log $SSD_DEVICE`, e.g. `sudo zpool add spinningdisks log /dev/nvme0n1p4`. If you do a lot of synchronous writes but can't afford an enterprise SSD, and you like to live dangerously, you can disable sync on a per-pool or per-dataset basis, e.g. `sudo zfs set sync=disabled $POOL/$DATASET`. With sync disabled, a crash or power loss can lose the last few seconds of writes that applications were told had reached disk.
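Before you add a log device, `zpool add -n` previews the resulting layout without changing the pool. Afterwards, the device should appear under a `logs` heading in `zpool status`. The hypothetical `has_slog` function below checks for that heading. It is a rough sketch that only looks at the first word of each line.

```shell
#!/bin/sh
# Sketch: read `zpool status` output on stdin and succeed (exit 0)
# if the pool has a "logs" section, i.e. a separate log device.
# has_slog is a hypothetical helper, not part of ZFS.
has_slog() {
  awk '$1 == "logs" { found = 1 } END { exit !found }'
}
```

For example: `sudo zpool status $POOL | has_slog && echo "SLOG present"`. `zfs get sync $POOL/$DATASET` shows whether sync has been disabled.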

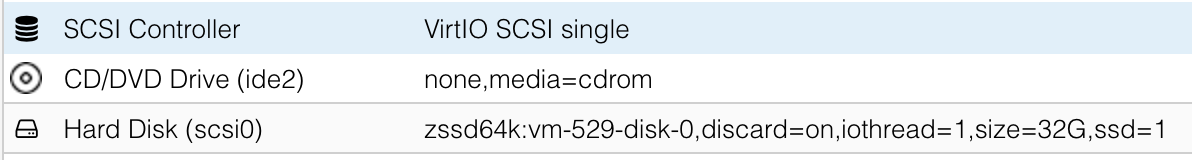

8. Configure your VMs to use 'SCSI Controller: VirtIO SCSI Single', and enable Discard and IO thread on each disk.
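The same settings can be applied from the host shell with `qm set`. This sketch assumes the VM's disk is already attached as `scsi0` and that you know its volume ID (for example `local-zfs:vm-100-disk-0`). `tune_vm_disk` and the `QM_CMD` dry-run hook are hypothetical. The controller change may only take effect after the VM is shut down and started again.

```shell
#!/bin/sh
# Sketch: switch a VM to VirtIO SCSI single and re-specify its scsi0
# disk with discard and iothread enabled.
# tune_vm_disk and QM_CMD are hypothetical; QM_CMD defaults to the
# real `qm` CLI, and QM_CMD=echo prints the command instead.
tune_vm_disk() {
  vmid=$1
  volume=$2   # assumed volume ID, e.g. local-zfs:vm-100-disk-0
  # QM_CMD is left unquoted on purpose so "echo" or "qm" both work.
  ${QM_CMD:-qm} set "$vmid" --scsihw virtio-scsi-single \
    --scsi0 "$volume,discard=on,iothread=1"
}
```

For example, `QM_CMD=echo tune_vm_disk 100 local-zfs:vm-100-disk-0` prints the command instead of running it.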

9. Inside your guest OS, enable trim. For example, on Debian 10:

`sudo systemctl enable --now fstrim.timer`. This starts the timer immediately and on every boot. It discards unused blocks once a week, freeing up space on the underlying ZFS pool. Note that this requires the Discard option (`discard=on`) to be enabled on the VM's disk, as described in the VirtIO SCSI tip above.
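Running fstrim inside the guest only frees space on the host if the virtual disk has discard enabled, so it is worth checking each VM's config on the host. The sketch below reads `qm config <vmid>` output and prints disks that lack `discard=on`, skipping CD-ROM drives. `missing_discard` is a hypothetical name. Inside the guest, `sudo fstrim -av` runs a trim immediately, and `systemctl list-timers fstrim.timer` shows when the next scheduled run is.

```shell
#!/bin/sh
# Sketch: read `qm config <vmid>` output on stdin and print the keys
# of disks (scsiN, virtioN, sataN, ideN) that lack discard=on.
# CD-ROM entries (media=cdrom) are skipped.
# missing_discard is a hypothetical helper, not part of Proxmox.
missing_discard() {
  grep -E '^(scsi|virtio|sata|ide)[0-9]+:' \
    | grep -v 'media=cdrom' \
    | grep -v 'discard=on' \
    | cut -d: -f1
}
```

For example, `qm config 100 | missing_discard` lists any disks of VM 100 that still need the Discard option.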

10. Beware that since ZFS is a copy-on-write filesystem, writes will most definitely get slower as the pool becomes fuller (and more fragmented). If a pool gets more than 70% full and writes become noticeably slower, the fragmentation can only be remedied by moving the data to another pool, destroying and recreating the pool, and moving the data back.
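A simple way to keep an eye on this is to compare each pool's capacity against the 70% mark. The hypothetical `full_pools` helper reads `zpool list -H -o name,capacity` output, where capacity is printed as a percentage such as `45%`. It reports pools above a threshold, which defaults to 70.

```shell
#!/bin/sh
# Sketch: read `zpool list -H -o name,capacity` output on stdin and
# report pools filled beyond a threshold (default 70 percent).
# full_pools is a hypothetical helper, not part of ZFS.
full_pools() {
  limit=${1:-70}
  awk -F '\t' -v limit="$limit" '{
    cap = $2
    sub(/%$/, "", cap)   # "82%" -> "82"
    if (cap + 0 > limit + 0) print $1 " is " cap "% full"
  }'
}
```

For example, run `zpool list -H -o name,capacity | full_pools`, or pass `full_pools 80` for a different limit.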

11. ZFS replication is your friend: it can help you build a hyperconverged infrastructure, seamlessly migrating workloads between nodes without the need for shared storage. More on that in a subsequent post.

Credit to Martin Heiland for his comprehensive writeup on ZFS tuning for VM workloads from a few years back.